Dan Turner, Loughborough University London

Introduction

The creative industries (CIs) have long used a broad range of technologies to create and distribute creative products and to attract audiences. However, a set of emerging technologies is disrupting the traditional methods of production, distribution, and consumption of creative products. Recent reports on the creative and cultural industries of the United Kingdom (POST, 2022) and the European Union (European Commission, 2021) identify technological areas such as Immersive Technologies (XR), Artificial Intelligence (AI), Cloud Technology, and 5G as having the potential to affect the future of these industries, as well as our wider society. CRAIC is developing a new project with XR Stories at The University of York which aims to explore, through in-depth case studies, how technologies such as these are currently being deployed and used within different areas of the CIs sector: specifically, the kinds of technologies being used, their application and deployment, and the types of productions in which they are being used. This essay provides the background and rationale for the project, as well as the research questions it seeks to answer.

Background

Investment and Growth

The term CreaTech was, to the best of our knowledge, first used during a series of CIs inward-investment events and later during the 2017 London Tech Week. Similar to terms such as FinTech, HealthTech, and EdTech, CreaTech aims to capture the growing impact and importance of technology for the CIs. A report by Tech Nation highlights that the term applies not only to the application of technology to creative outputs but also to the creation of new technologies to aid the production, deployment, and consumption of creative products (Tech Nation, 2021). While technology has always been involved in the production of creative work in some form, be that analogue or digital, the use of the term CreaTech by the Creative Industries Council and others could be argued to signal a new recognition of the role of technology in creative development.

Even during the economic challenges of the last several years, CreaTech companies, according to The CreaTech Report (Tech Nation, 2021), raised £981.8m in VC investment during 2020, an increase of 96% since 2017 and, according to the report's data, exceeded a forecast of £1.12bn to reach £1.14bn in 2021. AI & ML companies received the highest proportion of the investment, with £478.28m, while the combined total of over £130m raised by XR technologies highlights the growth of immersive technologies. According to The Immersive Economy report, the VR industry is predicted to be worth £294m by 2023, though the Covid-19 pandemic is likely to have affected this prediction, with Oxford Economics predicting a Gross Value Added shortfall of £29bn across the CIs. The UK did, however, raise the most money of any European nation and was third in the world, behind only the US and China. It is worth noting, however, that An analysis of CreaTech R&D business activity in the UK using data from 2010 to 2021 suggests that CreaTech companies tend to be over-represented in early-stage investment types, such as seed and angel investment. This over-representation supports the idea that CreaTech businesses continue to face barriers to financing and scaling. Even when adjusting for factors such as company age and whether a company is based within or outside London, the report found that investment events involving CreaTech companies tended to raise between 22% and 34% less.

Alongside private investment, there is also a drive by government bodies to grow research and innovation capabilities nationally across a variety of UK sectors. The Industrial Strategy Challenge Fund was launched through UKRI in 2018 to fund a suite of Challenges (projects) that sit within the Government's four themes of industrial strategy. The two projects relating to the CIs were the Creative Industries Clusters Programme (CICP) and The Audience of the Future Challenge (AotF). CICP launched in 2018 with £56m of investment from the Arts and Humanities Research Council (AHRC) to bring together academic and industry partners to drive innovation and skills using a regional and sector-based approach, while AotF was an industry-led programme with £39.3m of investment from Innovate UK to explore and develop new immersive technologies and experiences. UKRI has also recently announced CoSTAR, as part of its wider Infrastructure Fund, which will be a state-of-the-art facility fitted with real-time digital technologies such as motion and volumetric capture, alongside other XR technologies. It is intended to act as a central hub, supported by a network of regional labs across the UK.

This growing investment, against a challenging economic backdrop, suggests that the UK's CIs are a resilient sector with potential for growth, both in the creation of content and in the research and innovation needed to develop the next generation of creative productions and technologies. However, it is worth asking how long this growth can continue if CIs companies remain under-represented in later-stage investment.

Examples of using Advanced Technologies for Creative Experiences

Two experiences released recently are useful examples of how the technologies mentioned can be adapted and integrated to create novel creative experiences, and they show the different scales at which R&D is taking place within the sector. March 2021 saw a run of Dream, an interpretation of Shakespeare's A Midsummer Night's Dream, which was funded as part of AotF to explore the possibilities of interactive and remote live performances for mass audiences. What makes this project particularly interesting with regard to the adaptation and application of technologies is that Dream was originally scheduled to run in Spring 2020 for in-person and online audiences. Because of the Covid-19 pandemic, it then had to be rethought and recreated for online audiences only and, because of social distancing regulations, produced remotely. Dream was deployed via web browsers for desktop, mobile, and tablet and, as presented at Beyond 2021, reached 65k people from 92 different countries over 10 performances. This global reach was made possible by scheduling the performances at local times suited to international audiences.

A range of technologies was used, including motion capture to track the actors live within the performance space and face tracking to capture facial performances. These were combined with a virtual environment and assets created in Unreal Engine, which were presented on video screens at the sides and on a main video wall at the rear of the performance area. The performance area was 7m x 7m and the virtual environment 7km x 7km, both lit by complementary physical and virtual lighting rigs. Actors could interact with and play digital instruments using the data generated by the motion capture system. Using such an array of technologies together presented a variety of challenges that needed to be addressed in taking the performance from R&D into production and deployment. Some of these challenges were discussed during a presentation at Beyond 2021.

Though exploring all the challenges is outside the scope of this essay, one challenge relevant to using motion capture for live performance was the issue of drift. Drift in this context is unwanted movement of the model on the horizontal plane within the motion capture software (Damgrave and Lutters, 2009). So, although the subject in the suit may be stationary, the model may slowly move across the virtual environment. Drift also occurs while the subject is moving, creating a disparity between the horizontal movement of the subject and that of the model. To avoid this, the production used an optical motion capture system, which avoids the drift issue apparent in non-optical systems such as the Xsens system (Roetenberg, Luinge, and Slycke, 2013), as can be seen during the demo within Dream's Beyond presentation. While optical systems solve the issue of drift, they do, however, rely on line of sight between the markers on the subject and the camera system, which limits the performance area and increases set-up time. A non-optical system, on the other hand, uses inertial sensors on the suit to estimate the position of body segments relative to each other, and this information is transmitted wirelessly to a computer, so capture is not limited to a particular space. Each system has strengths and weaknesses, and Dream's production team had to decide which aspects of a motion capture system mattered most for their use case. In this instance, given that it was a live performance, accurate positioning of the performer within the virtual world was more important than freedom from a defined performance area.
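To make the notion of drift more concrete, the following Python sketch shows why position estimated purely from inertial measurements wanders even when the performer stands still: a tiny constant accelerometer bias, integrated twice, becomes a position error that grows with the square of time. The bias value, sample rate, and function name are hypothetical. Real inertial suits such as the Xsens system fuse gyroscope, accelerometer, and magnetometer data with a biomechanical model, so their drift is far smaller than this naive double integration suggests; only the shape of the growth is the point here.

```python
# Hypothetical sketch of inertial drift; not the Xsens algorithm or API.
# Assumptions: one accelerometer axis, a constant bias, a 100 Hz sample rate,
# and naive double integration with no drift correction of any kind.

def integrate_position(accels, dt):
    """Double-integrate acceleration samples (m/s^2) into positions (m)."""
    velocity = 0.0
    position = 0.0
    positions = []
    for a in accels:
        velocity += a * dt          # acceleration -> velocity
        position += velocity * dt   # velocity -> position
        positions.append(position)
    return positions


SAMPLE_RATE_HZ = 100   # hypothetical sensor rate
DT = 1.0 / SAMPLE_RATE_HZ
BIAS = 0.02            # hypothetical accelerometer bias, m/s^2

# The performer is standing still, so the true acceleration is zero,
# but the sensor reports the small constant bias on every sample.
for seconds in (1, 10, 60):
    samples = [BIAS] * (seconds * SAMPLE_RATE_HZ)
    drift = integrate_position(samples, DT)[-1]
    print(f"after {seconds:>2} s stationary: apparent displacement {drift:.3f} m")
```

With these assumed values the apparent displacement is about 1 cm after one second, about 1 m after ten seconds, and tens of metres after a minute. An optical system sidesteps this by measuring marker positions directly in every frame rather than accumulating them from motion data.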

Alongside larger demonstrators such as Dream, there are also smaller-scale projects focusing on specific technologies or areas of creative practice. SuperCharging Audio Storytelling: 3D Audio in Virtual Reality is a practice-led research project funded by XR Stories to explore the challenges of designing virtual acoustic environments for room-scale virtual reality experiences (Popp and Murphy, 2022). While also using game engine technology to create a virtual environment, this project focused on developing a new methodology for sound design in immersive experiences with six degrees of freedom, to make the experience more consistent for the user. This is particularly relevant to non-interactive sound beds and non-diegetic music, which can present spatial cues that contradict those of in-game sound sources. One example is the use of water droplet sounds within a cavernous environment, implemented and compared both as a static Ambisonic bed (a common technique for ambient/environmental sounds) and as audio objects attached to objects within the virtual space, manipulated via what the authors term a collision-based system. This system uses forces (such as gravity) to push objects (such as water droplets), which eventually collide with either another object or the user. Each collision triggers a sound event matched to the collision velocity and, in the case of the water droplets, also fades out and destroys the visual object. The sound event excites the environment's reverberation, giving the impression that the event is indeed taking place in the virtual environment. The authors comment that although the static Ambisonic bed containing the water droplets did successfully create a sense of space, it did not respond dynamically to the player's position.

The object- and collision-based approach allows individual water droplets to become louder and quieter relative to the player's proximity and to be correctly aligned in space and time with the visual objects, as would be the case in a real environment. This is not possible with a static Ambisonic bed, because of the randomness of the visual object generation and of the player's movement in space and time.
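The collision-based idea can be sketched in Python as follows. This is a hypothetical illustration, not Popp and Murphy's implementation: the class and function names, the linear velocity-to-gain mapping, and the inverse-distance attenuation law are all our assumptions. It shows only the two properties the approach relies on: each droplet becomes a positioned audio object at its impact point, and its level depends on both impact velocity and the listener's distance, neither of which a static Ambisonic bed can provide.

```python
# Hypothetical sketch of a collision-triggered sound event.
# Names, constants, and the gain laws are illustrative assumptions only.
import math
from dataclasses import dataclass


@dataclass
class Vec3:
    x: float
    y: float
    z: float

    def distance_to(self, other: "Vec3") -> float:
        return math.sqrt((self.x - other.x) ** 2
                         + (self.y - other.y) ** 2
                         + (self.z - other.z) ** 2)


@dataclass
class SoundEvent:
    position: Vec3   # impact point, so the renderer can spatialise the sound there
    gain: float      # linear gain after velocity and distance scaling


MAX_IMPACT_SPEED = 5.0   # hypothetical speed (m/s) that maps to full level
REF_DISTANCE = 1.0       # hypothetical distance (m) below which no attenuation applies


def on_collision(hit_point: Vec3, impact_speed: float, listener: Vec3) -> SoundEvent:
    # Faster impacts produce louder events, clamped to the range [0, 1].
    velocity_gain = min(max(impact_speed / MAX_IMPACT_SPEED, 0.0), 1.0)
    # Inverse-distance attenuation: droplets nearer the player are louder.
    distance = max(hit_point.distance_to(listener), REF_DISTANCE)
    distance_gain = REF_DISTANCE / distance
    return SoundEvent(position=hit_point, gain=velocity_gain * distance_gain)


# Usage: two droplets landing with the same speed, near and far from the player.
listener = Vec3(0.0, 1.7, 0.0)
near = on_collision(Vec3(1.0, 0.0, 0.0), impact_speed=4.0, listener=listener)
far = on_collision(Vec3(8.0, 0.0, 0.0), impact_speed=4.0, listener=listener)
print(near.gain > far.gain)
```

In a game engine, the returned event would be handed to the spatial audio renderer, which would also feed the environment's reverberation; that stage is omitted here.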

Tracking Technological Innovation

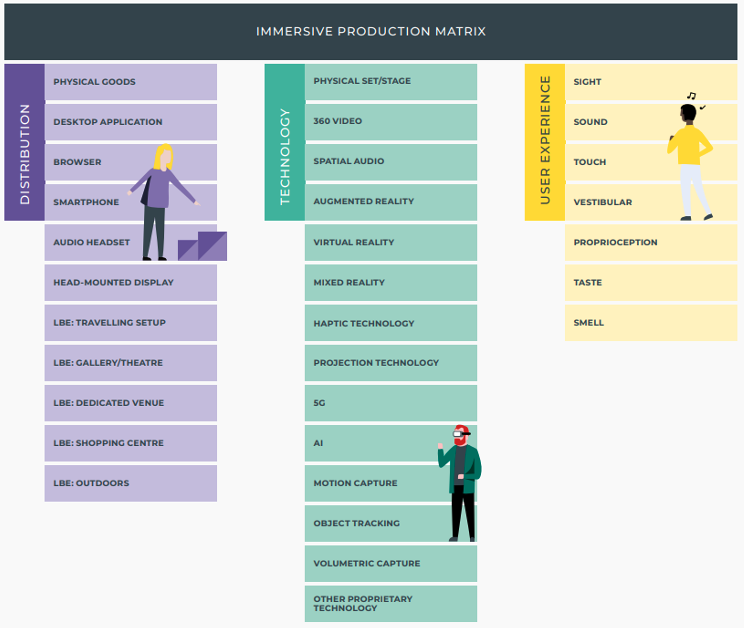

A number of recent reports have produced different frameworks through which to interrogate various aspects of creative productions. The UK Creative Immersive Landscape 2020 (Digital Catapult, 2020) used what it refers to as the Immersive Production Matrix (Fig 1) to analyse productions across three dimensions: Distribution, Technology, and User Experience. Each dimension consists of a number of choices relating to distribution channels, technologies employed, and the nature of the audience experience, respectively. The more space a production occupies across the dimensions, the more complex it is said to be to undertake.
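One way to make the matrix concrete is to record each production as a set of choices per dimension, as in the following hypothetical Python sketch. The option labels are taken from examples mentioned in this essay, but the scoring rule (counting the choices a production occupies) is our simplification of the idea that occupying more of the matrix means more complexity, not the report's own method.

```python
# Hypothetical sketch: a production's footprint on the Immersive Production Matrix.
# The counting rule is an assumed simplification, not Digital Catapult's method.
from dataclasses import dataclass, field

DIMENSIONS = ("distribution", "technology", "user_experience")


@dataclass
class Production:
    name: str
    choices: dict[str, set[str]] = field(default_factory=dict)

    def footprint(self) -> dict[str, int]:
        """Number of choices the production occupies in each dimension."""
        return {d: len(self.choices.get(d, set())) for d in DIMENSIONS}

    def complexity(self) -> int:
        """Crude proxy for complexity: total choices across all dimensions."""
        return sum(self.footprint().values())


# Usage with two illustrative (invented) productions.
simple = Production("HMD-only VR piece", {
    "distribution": {"HMD"},
    "technology": {"VR", "Spatial Audio"},
    "user_experience": {"sight", "sound"},
})
hybrid = Production("touring installation", {
    "distribution": {"HMD", "location-based", "smartphone"},
    "technology": {"VR", "Spatial Audio", "Physical Set", "5G"},
    "user_experience": {"sight", "sound", "touch"},
})
print(simple.complexity(), hybrid.complexity())  # 5 10
```

Keeping the raw choices, rather than only the score, also allows the kind of year-on-year tallying of technologies and distribution channels that the report carried out across the CreativeXR cohorts.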

Using the matrix, the report analysed CreativeXR-funded projects across three years (2017-2020) to identify longer-term trends within creative prototypes. CreativeXR is a 12-week acceleration programme for the arts and cultural industries, developed by Digital Catapult and Arts Council England and launched in 2017. Over all three years, the most used technologies were VR, Spatial Audio, and a Physical Set. 5G technologies only began to be used in the second and third years, which aligns with the introduction of 5G to parts of the UK from May 2019 onwards. This supports the idea that the CIs can be early adopters of new technologies. For example, 5G Edge-XR, a project launched in August 2020 and led by BT with a range of partners, set out to explore and demonstrate how 5G networks combined with cloud-based GPUs can deliver new immersive experiences to users via smartphones, tablets, and TVs. The most popular forms of distribution over the three years were head-mounted displays (HMDs), followed by location-based experiences at galleries and theatres, and finally smartphones. Although smartphones were only the third most used form of distribution over the three years, there was a marked increase in their use in the third year, when 5G became more widely available. In terms of user experience characteristics, sight and sound were the most common, owing to the heavy use of VR and HMDs as end-user technologies. Head tracking also facilitates a sense of space and orientation, with some virtual touch enabled through VR motion controllers. Given the rise of at-home digital consumption during the pandemic and the increased availability of 5G, it would be interesting to explore technological trends from 2020 through to 2023 to ascertain whether there has been a sustained reduction in location-based experiences since the economy began to reopen after the lockdowns.

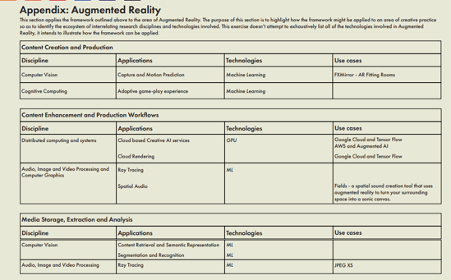

The Creative Technologies Framework was commissioned by the Creative Challenge Team at UKRI, working with Loughborough University London, to facilitate collaboration between technology researchers and creative practitioners. Its purpose is to identify and classify technologies within areas of creative practice, covering the underlying technologies, technology-enabled applications, and the related areas of research and disciplines that are applied in creative practice. The framework consists of five categories within which technologies can be placed: Content Creation and Production; Content Enhancement and Production Workflows; Media Storage, Extraction and Analysis; Connectivity; and Presentation and Consumption. While, to our knowledge at the time of writing, the Framework has not been used in any formal capacity, there is an example application of it to the area of Augmented Reality, shown in Fig 2. Frameworks such as this allow the interrogation of both areas of creative practice and specific creative products.
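As an illustration of how the Framework's categories might be used to interrogate a specific production, the hypothetical Python sketch below encodes the five categories as an enum, tags a production's technologies, and lists the categories that no technology falls into. The category names come from the Framework; the example assignments for a Dream-like production are our own guesses, not taken from the Framework or from Fig 2.

```python
# Hypothetical sketch: tagging a production's technologies with the five
# Creative Technologies Framework categories. Assignments are assumptions.
from collections import defaultdict
from enum import Enum


class Category(Enum):
    CONTENT_CREATION_AND_PRODUCTION = "Content Creation and Production"
    CONTENT_ENHANCEMENT_AND_WORKFLOWS = "Content Enhancement and Production Workflows"
    MEDIA_STORAGE_EXTRACTION_ANALYSIS = "Media Storage, Extraction and Analysis"
    CONNECTIVITY = "Connectivity"
    PRESENTATION_AND_CONSUMPTION = "Presentation and Consumption"


def group_by_category(tags: dict[str, Category]) -> dict[Category, list[str]]:
    """Invert technology -> category tags into category -> sorted technologies."""
    grouped: dict[Category, list[str]] = defaultdict(list)
    for technology, category in tags.items():
        grouped[category].append(technology)
    return {category: sorted(techs) for category, techs in grouped.items()}


def uncovered(tags: dict[str, Category]) -> list[Category]:
    """Categories, in Framework order, that no tagged technology falls into."""
    used = set(tags.values())
    return [category for category in Category if category not in used]


# Illustrative tags for a Dream-like production; these assignments are assumptions.
example_tags = {
    "motion capture": Category.CONTENT_CREATION_AND_PRODUCTION,
    "face tracking": Category.CONTENT_CREATION_AND_PRODUCTION,
    "real-time game engine": Category.CONTENT_ENHANCEMENT_AND_WORKFLOWS,
    "web browser delivery": Category.PRESENTATION_AND_CONSUMPTION,
}

for category, techs in group_by_category(example_tags).items():
    print(f"{category.value}: {', '.join(techs)}")
print("Not covered:", [c.value for c in uncovered(example_tags)])
```

Listing the uncovered categories is one simple way a case study could show where a production does, or does not, draw on each area of the Framework.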

Rationale

The literature outlined in this essay shows that the CIs experienced a period of growth from 2017 to 2021 and attracted increasing amounts of investment year on year. Alongside private investment, there have also been government-funded initiatives to promote R&I within the sector. Given such a strong period of growth and innovation, it is useful to track and monitor technological trends, documenting not only the technologies being adopted but also their impact on production workflows and audience consumption. Now is a particularly relevant time to conduct such research, given the disruption caused by Covid-19 to the CIs and the rise in digital consumption attributed to the pandemic. Through this research we can explore how companies within different areas of the sector are using these emerging technologies and the impact they are having on their practice.

The insights gained from this research can then be considered alongside current literature to ascertain the state of the UK's CIs with respect to their capabilities and readiness in the face of a rapidly changing technological landscape. The outputs of that process could then help inform recommendations to government and on research and development, industry and academic partnerships, and workforce training. This research also provides the opportunity to apply the Creative Technologies Framework to a set of specific creative outputs, as opposed to a general area of creative practice, and to recommend updates to the framework, if appropriate.

Research Questions

This project will be an intensive piece of work focused on a small number of projects. Through semi-structured interviews, alongside a wider review of literature and practice, it aims to understand what technologies are being used, how they are being applied, and how they are influencing the production and consumption of creative experiences, by addressing the following research questions:

- What technologies are being utilised throughout different parts of the production process and how do they contribute to the production of the experience?

- How do the technologies enable or contribute to the consumption of the experience?

- To what extent does/could the technology aid scalability relating to:

– Reproducibility

– Size of audience

– Scalability of business

- How does the technology impact the value of the experience/company relating to:

– Reduction in costs

– Increased market access

– Marketable IP

– Additional funding/investment

Next steps

The project will consist of three in-depth case studies exploring companies and projects working within different areas of the creative technology space. The next step will be to produce a longlist of possible case study subjects and to define the criteria for shortlisting and final selection. The following essays in this series will outline the methodology for the selection process, background on the chosen case studies, the methodology for data gathering and analysis, and, finally, our findings and recommendations for future work.

August 2022